Intro: Analsis of Turbofan ReduceNode && Inline

Reduce-node Analysis

初始化GraphReducer

1

2

3//graph-reducer.cc:28

GraphReducer::GraphReducer(...){...}添加reducer器,通过栈结构实现

1

2

3

4

5//graph-reducer.cc:43

void GraphReducer::AddReducer(Reducer* reducer) {

reducers_.push_back(reducer);

}ReduceGraph 入口–Backward DFS

1

2

3//graph-reducer.cc:78

void GraphReducer::ReduceGraph() { ReduceNode(graph()->end()); }ReduceNode 主逻辑

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30//graph-reducer.cc:48

void GraphReducer::ReduceNode(Node* node) {

DCHECK(stack_.empty());

DCHECK(revisit_.empty());

Push(node);

for (;;) {

if (!stack_.empty()) {

// Process the node on the top of the stack, potentially pushing more or

// popping the node off the stack.

ReduceTop();

} else if (!revisit_.empty()) {

// If the stack becomes empty, revisit any nodes in the revisit queue.

Node* const node = revisit_.front();

revisit_.pop();

if (state_.Get(node) == State::kRevisit) {

// state can change while in queue.

Push(node);

}

} else {

// Run all finalizers.

for (Reducer* const reducer : reducers_) reducer->Finalize();

// Check if we have new nodes to revisit.

if (revisit_.empty()) break;

}

}

DCHECK(revisit_.empty());

DCHECK(stack_.empty());

}进入 Reduce Top,紧接着进入 Reduce

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46//graph-reducer.cc:82

Reduction GraphReducer::Reduce(Node* const node) {

auto skip = reducers_.end();

for (auto i = reducers_.begin(); i != reducers_.end();) {

if (i != skip) {

Reduction reduction = (*i)->Reduce(node);

if (!reduction.Changed()) {

//如果当前 reducer 没有 reduce 该 node,则跳到下一个reducer

// No change from this reducer.

} else if (reduction.replacement() == node) {

//如果当前 reduer 针对该 node 进行了状态更新(非替换),则重新遍历所有的reducers

// {replacement} == {node} represents an in-place reduction. Rerun

// all the other reducers for this node, as now there may be more

// opportunities for reduction.

if (FLAG_trace_turbo_reduction) {

StdoutStream{} << "- In-place update of " << *node << " by reducer "

<< (*i)->reducer_name() << std::endl;

}

skip = i;

i = reducers_.begin();

continue;

} else {

// 如果当前reducer 替换了该 node 为其他 node,则继续跳到下一个 reducer

// {node} was replaced by another node.

if (FLAG_trace_turbo_reduction) {

StdoutStream{} << "- Replacement of " << *node << " with "

<< *(reduction.replacement()) << " by reducer "

<< (*i)->reducer_name() << std::endl;

}

return reduction;

}

}

++i;

}

if (skip == reducers_.end()) {

// No change from any reducer.

return Reducer::NoChange();

}

// At least one reducer did some in-place reduction.

return Reducer::Changed(node);

}进入特定 pass 的Reduce 过程,例如 DeadCodeElimination:

1

2

3

4

5

6

7

8

9

10

11//dead-code-elimination.cc:48

Reduction DeadCodeElimination::Reduce(Node* node) {

DisallowHeapAccess no_heap_access;

switch (node->opcode()) {

case IrOpcode::kEnd:

return ReduceEnd(node);

...

}

UNREACHABLE();

}

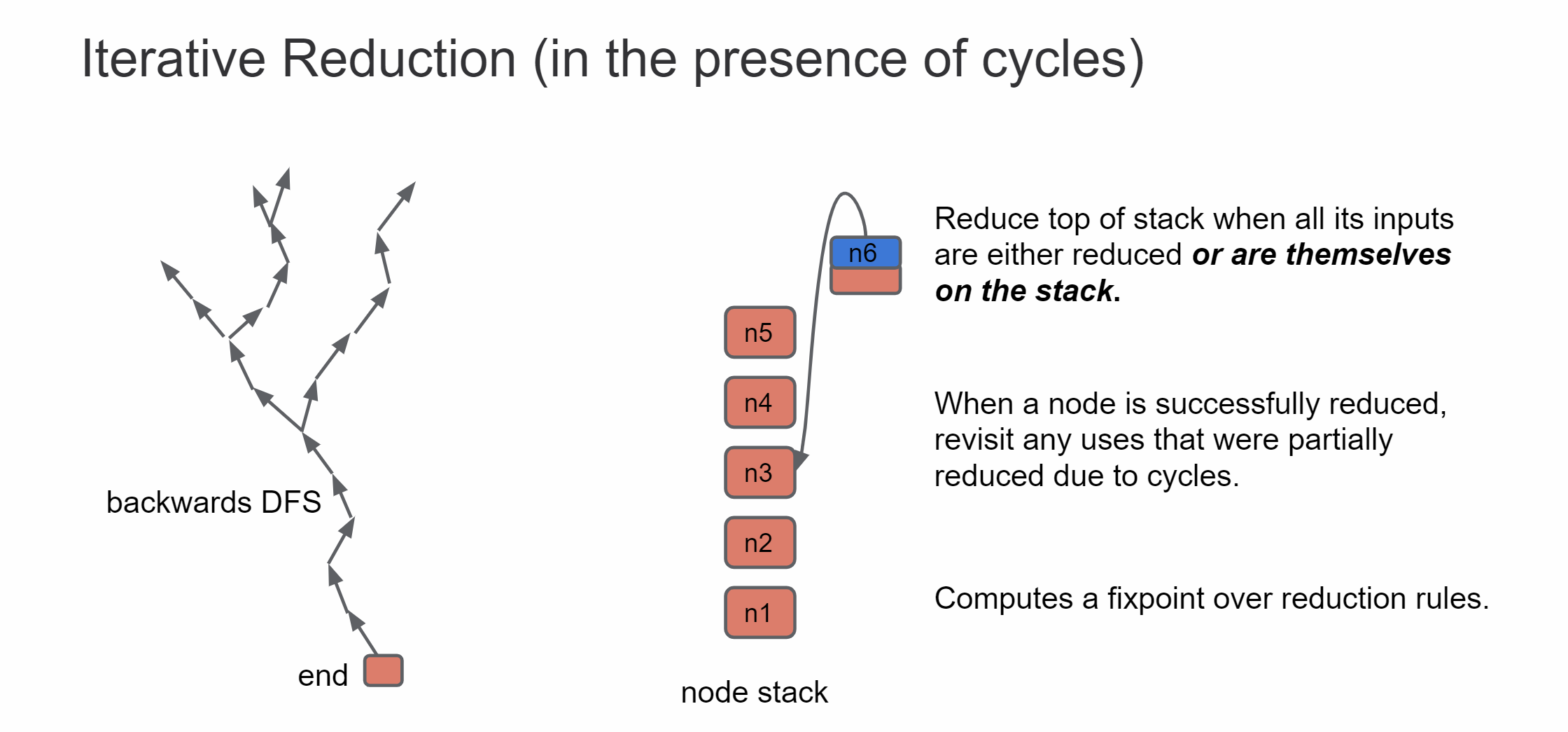

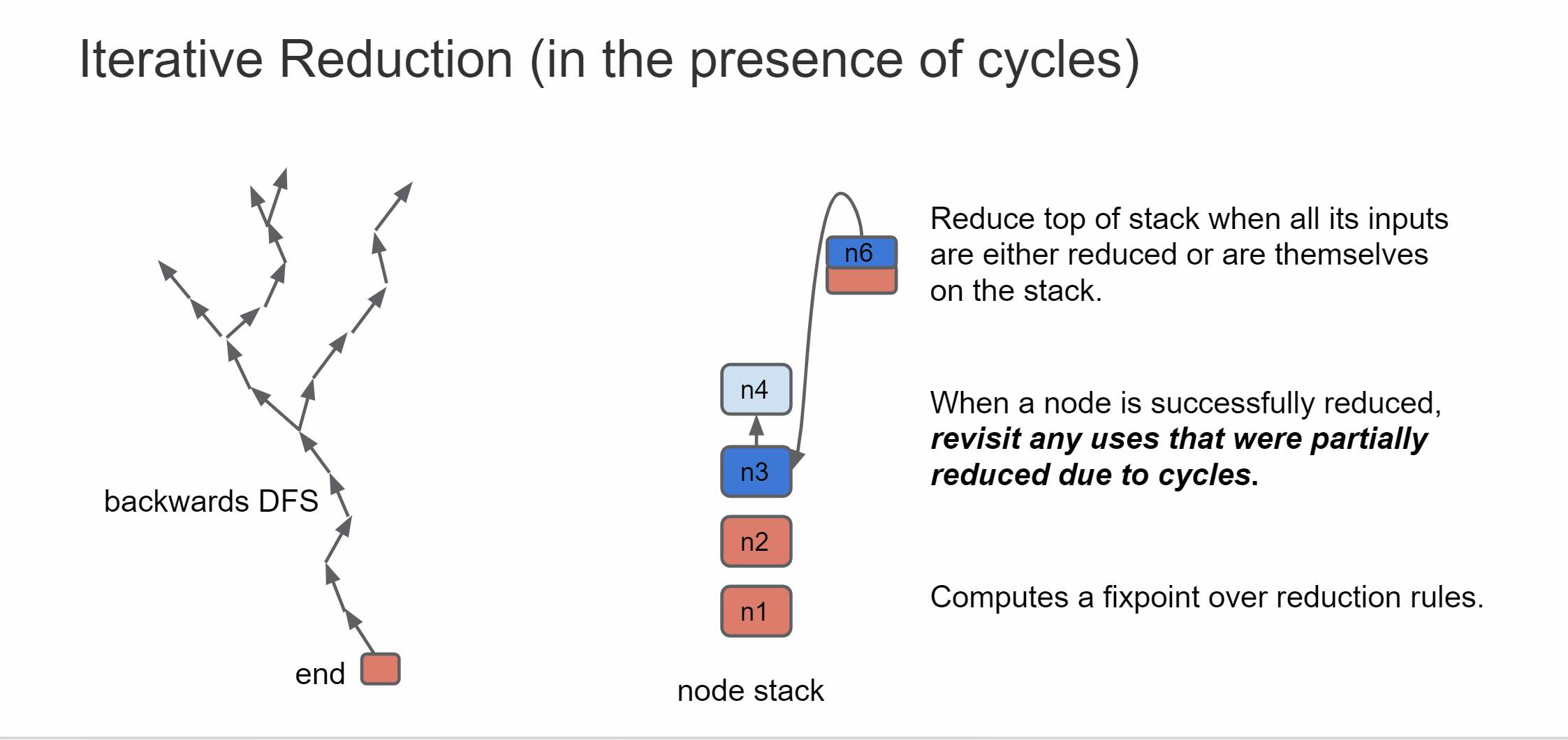

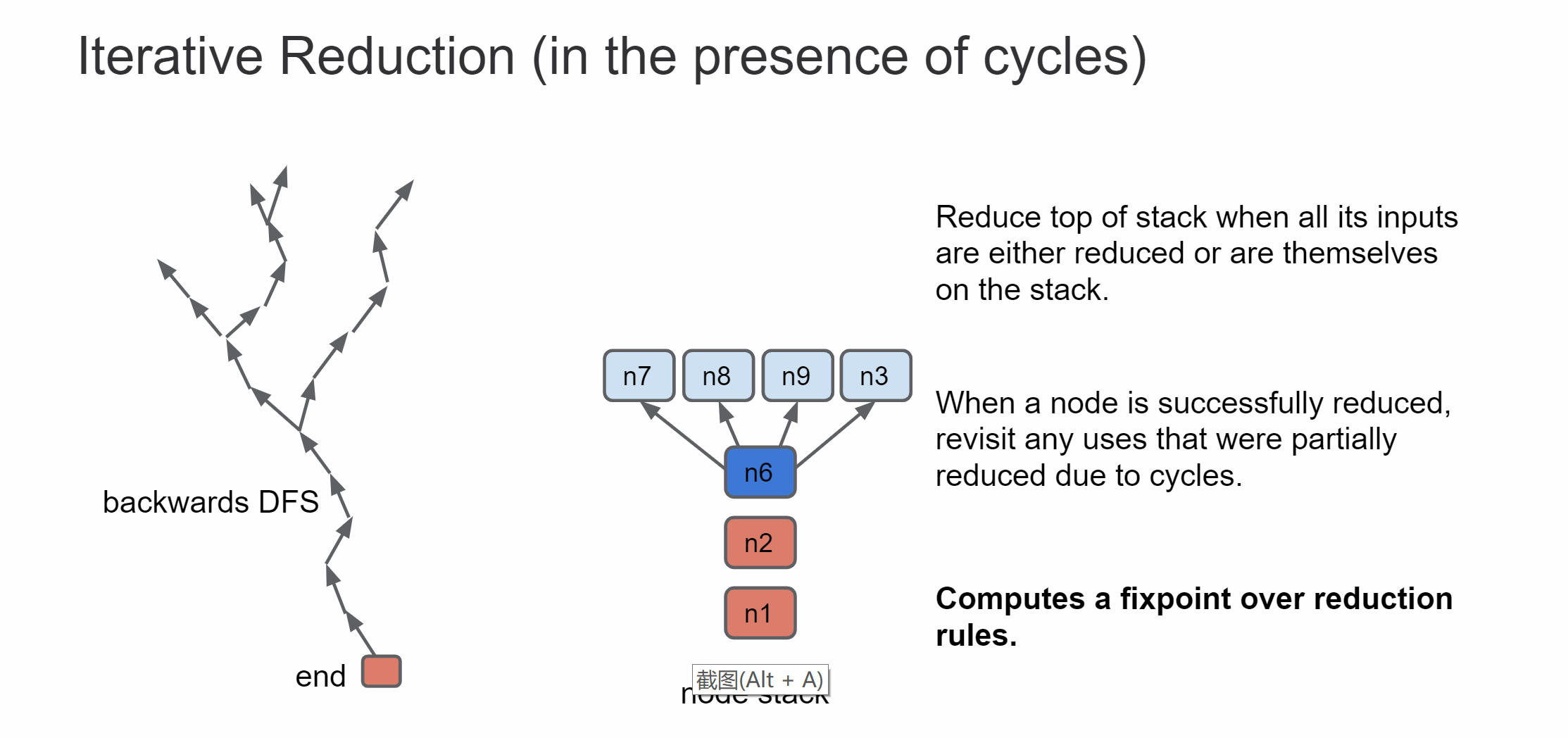

【+】 stack 是实现 backward DFS 的基本栈结构,Reducer为栈式自动机

【+】 revisit 是需要重复 reduce 的 node 列表,通常是reduced 节点的 use

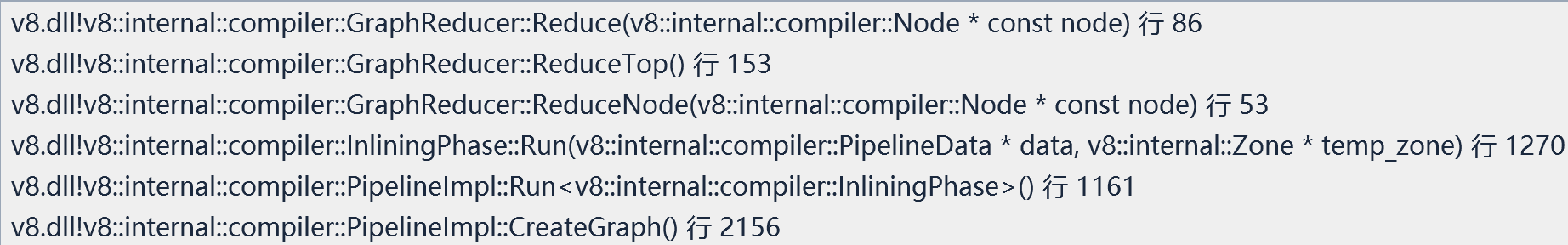

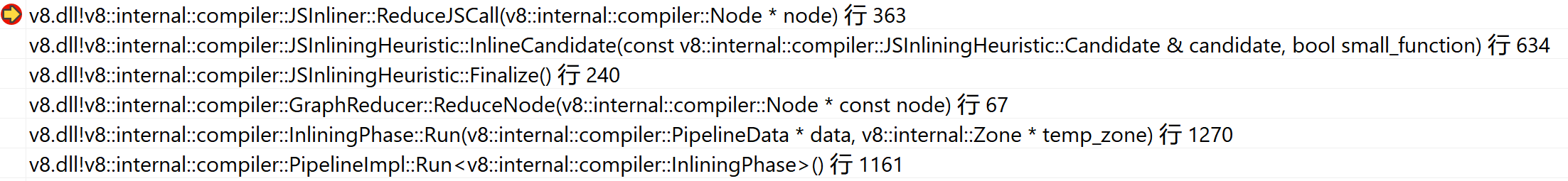

【+】 Reduce Top 实现具体的 Node Reduce;接着循环遍历各 pass 的 Reducer。调用层次:

Reduce-node Algorithm

- 使用栈结构的递归

- 根节点为 graph->end()

- 从下向上 reduce

- 如果节点之间存在循环调用,比如:

则:

则: 因此在Reduce n3 结束时:

因此在Reduce n3 结束时: 重新Reduce n6(n3->use=n6,push n6)

重新Reduce n6(n3->use=n6,push n6)

- 需要维护一个 revisit 栈

- 行为:A 节点优化完成后将 A 的 use 节点 push stack

JSInline

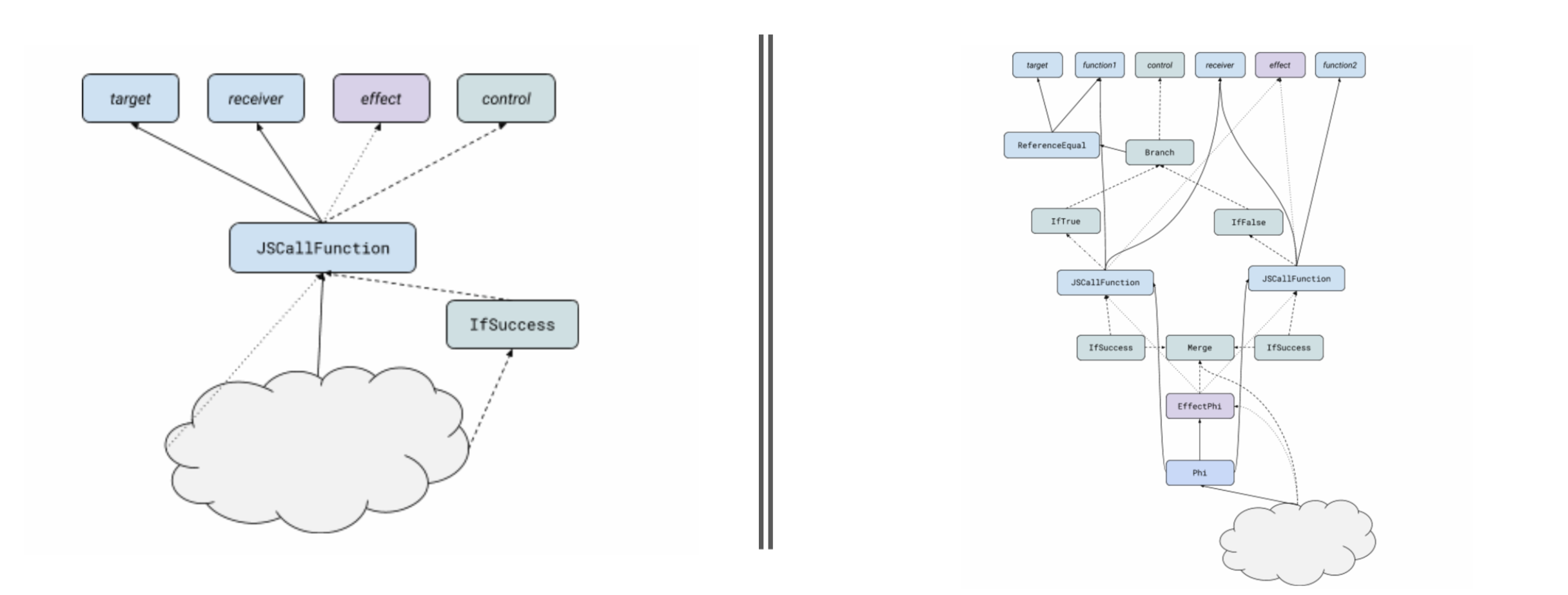

当为一个函数A生成优化代码的时候,Turbofan 可以为 A 函数中调用的其他函数进行 Inline。但是仅仅内联用户的代码是远远不够的,编译器还需要对 buildin 函数进行内联,buildin的内联和常规的内联是分开处理的。

General Inline

JSInliner 类描述了常规内联的行为,它有一个前驱 JSInliningHeuristic 用来决定内联策略。核心的内联器其实很简单:它针对 JSCallFunction 和 JSCallConstruct 进行处理,用 BytecodeGraphBuilder 根据 Interpreter 生成的 Bytecode 为 callee 直接生成一个子图,最终将 Call 节点替换为该子图。内联既可以内联一个callee,也可以多态内联,内联多个 callee 的 phi。

多态 Inline

基本的逻辑和单态差不多,但是需要处理多个目标,而且再决定内联某个多态节点时候,我们需要准确的分离出我们要 inline 的 target。heuristic目前只考虑所有节点都已经知道的多态,例如callee为phi类型而且接受的输入为指向 JSFunctions 的 HeapConstant。而且,至少有一个 candidate 会被选中进行 Inline。

所以当 heuristics 让我们去Inline一个多态节点的时候,我们第一步要做的就是拓展JSCallFunction/JSCallConstruct 为众多单节点 call 的子图,然后对每一个单独分离完毕的 target 进行单态 Inline 。

核心的代码在 JSInliningHeuristic::CreateOrReuseDispatch( js-inlining-heuristic.cc#L549 )

Builtin Inlining

Builtin 的 Inline 和上面讲述的 General Inline 有些许不同,原因有下:

- 首先 Builtin 函数没有字节码,Turbofan 直接 Call Stub。

- 其次,若非一个内联是有类似于没有 check 的 fast 途径,内联不如直接 Call Stub 来的快。

Inline 策略

TurboFan 将会在两个地方进行 Builtin 的内联:

- inlining/native context specialization pass: JSCallReducer

- typed lowering pass: JSBuiltinReducer

从 pass 运行的先后就可以得到: JSBuiltinReducer 处理的 Inline 必须在 Type Pass 后面,也就是需要采集 Type Information;JSCallReducer 处理的则稍早,处理一些类型严格的 Builtin 比如 Array.prototype.map。

Builtin Inlining

JSInliningHeuristic

现讨论单个 pass:JSInliningHeuristic的 Reduce 过程

调用堆栈:

JSInliningHeuristic::Reduce

1 | //js-inlining-heuristic.cc:89 |

JSInliningHeuristic::CollectFunctions

1 | //js-inlining-heuristic.cc:35 |

1 | BytecodeArray SharedFunctionInfo::GetBytecodeArray() const { |

JSInliningHeuristic::InlineCandidate

1 | //js-inlining-heuristic.cc:628 |

JSInliner::ReduceJSCall

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

229

230

231

232

233

234

235

236

237

238

239

240

241

242

243

244

245

246

247

248

249

250

251

252

253

254

255

256

257

258

259

260

261

262

263

264

265

266

267

268// js-inlining.cc:363

Reduction JSInliner::ReduceJSCall(Node* node) {

DCHECK(IrOpcode::IsInlineeOpcode(node->opcode()));

JSCallAccessor call(node);

// Determine the call target.

base::Optional<SharedFunctionInfoRef> shared_info(DetermineCallTarget(node));

if (!shared_info.has_value()) return NoChange();

DCHECK(shared_info->IsInlineable());

// Constructor must be constructable.

if (node->opcode() == IrOpcode::kJSConstruct &&

!IsConstructable(shared_info->kind())) {

TRACE("Not inlining " << *shared_info << " into " << info_->shared_info()

<< " because constructor is not constructable.");

return NoChange();

}

// Inline node 必须是 kJSCall 或 IsClassConstructor

// Class constructors are callable, but [[Call]] will raise an exception.

// See ES6 section 9.2.1 [[Call]] ( thisArgument, argumentsList ).

if (node->opcode() == IrOpcode::kJSCall &&

IsClassConstructor(shared_info->kind())) {

TRACE("Not inlining " << *shared_info << " into " << info_->shared_info()

<< " because callee is a class constructor.");

return NoChange();

}

// To ensure inlining always terminates, we have an upper limit on inlining

// the nested calls.

int nesting_level = 0;

for (Node* frame_state = call.frame_state();

frame_state->opcode() == IrOpcode::kFrameState;

frame_state = frame_state->InputAt(kFrameStateOuterStateInput)) {

nesting_level++;

if (nesting_level > kMaxDepthForInlining) {

TRACE("Not inlining "

<< *shared_info << " into " << info_->shared_info()

<< " because call has exceeded the maximum depth for function "

"inlining.");

return NoChange();

}

}

Node* exception_target = nullptr;

NodeProperties::IsExceptionalCall(node, &exception_target);

// JSInliningHeuristic has already filtered candidates without a

// BytecodeArray by calling SharedFunctionInfoRef::IsInlineable. For the ones

// passing the IsInlineable check, The broker holds a reference to the

// bytecode array, which prevents it from getting flushed.

// Therefore, the following check should always hold true.

CHECK(shared_info.value().is_compiled());

if (!FLAG_concurrent_inlining && info_->is_source_positions_enabled()) {

SharedFunctionInfo::EnsureSourcePositionsAvailable(isolate(),

shared_info->object());

}

TRACE("Inlining " << *shared_info << " into " << info_->shared_info()

<< ((exception_target != nullptr) ? " (inside try-block)"

: ""));

// Determine the targets feedback vector and its context.

Node* context;

FeedbackVectorRef feedback_vector = DetermineCallContext(node, context);

if (FLAG_concurrent_inlining) {

if (!shared_info.value().IsSerializedForCompilation(feedback_vector)) {

TRACE("Missed opportunity to inline a function ("

<< *shared_info << " with " << feedback_vector << ")");

return NoChange();

}

}

// [+] 现在开始真正的inlining工作

// ----------------------------------------------------------------

// After this point, we've made a decision to inline this function.

// We shall not bailout from inlining if we got here.

BytecodeArrayRef bytecode_array = shared_info.value().GetBytecodeArray();

// Remember that we inlined this function.

int inlining_id = info_->AddInlinedFunction(

shared_info.value().object(), bytecode_array.object(),

source_positions_->GetSourcePosition(node));

// 建立子图

// Create the subgraph for the inlinee.

Node* start;

Node* end;

{

// Run the BytecodeGraphBuilder to create the subgraph.

Graph::SubgraphScope scope(graph());

BytecodeGraphBuilderFlags flags(

BytecodeGraphBuilderFlag::kSkipFirstStackCheck);

if (info_->is_analyze_environment_liveness()) {

flags |= BytecodeGraphBuilderFlag::kAnalyzeEnvironmentLiveness;

}

if (info_->is_bailout_on_uninitialized()) {

flags |= BytecodeGraphBuilderFlag::kBailoutOnUninitialized;

}

{

// TODO(mslekova): Remove the following once bytecode graph builder

// is brokerized. Also, remove the context argument from

// BuildGraphFromBytecode and extract it from the broker there.

AllowHandleDereference allow_handle_deref;

AllowHandleAllocation allow_handle_alloc;

AllowHeapAllocation allow_heap_alloc;

AllowCodeDependencyChange allow_code_dep_change;

CallFrequency frequency = call.frequency();

Handle<Context> native_context =

handle(info_->native_context(), isolate());

// ----------------------------------------------------------------

// BuildGraph!

// ----------------------------------------------------------------

BuildGraphFromBytecode(broker(), zone(), bytecode_array.object(),

shared_info.value().object(),

feedback_vector.object(), BailoutId::None(),

jsgraph(), frequency, source_positions_,

native_context, inlining_id, flags);

}

// Extract the inlinee start/end nodes.

start = graph()->start();

end = graph()->end();

}

// If we are inlining into a surrounding exception handler, we collect all

// potentially throwing nodes within the inlinee that are not handled locally

// by the inlinee itself. They are later wired into the surrounding handler.

NodeVector uncaught_subcalls(local_zone_);

if (exception_target != nullptr) {

// Find all uncaught 'calls' in the inlinee.

AllNodes inlined_nodes(local_zone_, end, graph());

for (Node* subnode : inlined_nodes.reachable) {

// Every possibly throwing node should get {IfSuccess} and {IfException}

// projections, unless there already is local exception handling.

if (subnode->op()->HasProperty(Operator::kNoThrow)) continue;

if (!NodeProperties::IsExceptionalCall(subnode)) {

DCHECK_EQ(2, subnode->op()->ControlOutputCount());

uncaught_subcalls.push_back(subnode);

}

}

}

Node* frame_state = call.frame_state();

Node* new_target = jsgraph()->UndefinedConstant();

// Inline {JSConstruct} requires some additional magic.

if (node->opcode() == IrOpcode::kJSConstruct) {

// Swizzle the inputs of the {JSConstruct} node to look like inputs to a

// normal {JSCall} node so that the rest of the inlining machinery

// behaves as if we were dealing with a regular function invocation.

new_target = call.new_target(); // Retrieve new target value input.

node->RemoveInput(call.formal_arguments() + 1); // Drop new target.

node->InsertInput(graph()->zone(), 1, new_target);

// Insert nodes around the call that model the behavior required for a

// constructor dispatch (allocate implicit receiver and check return value).

// This models the behavior usually accomplished by our {JSConstructStub}.

// Note that the context has to be the callers context (input to call node).

// Also note that by splitting off the {JSCreate} piece of the constructor

// call, we create an observable deoptimization point after the receiver

// instantiation but before the invocation (i.e. inside {JSConstructStub}

// where execution continues at {construct_stub_create_deopt_pc_offset}).

Node* receiver = jsgraph()->TheHoleConstant(); // Implicit receiver.

Node* context = NodeProperties::GetContextInput(node);

if (NeedsImplicitReceiver(shared_info.value())) {

Node* effect = NodeProperties::GetEffectInput(node);

Node* control = NodeProperties::GetControlInput(node);

Node* frame_state_inside = CreateArtificialFrameState(

node, frame_state, call.formal_arguments(),

BailoutId::ConstructStubCreate(), FrameStateType::kConstructStub,

shared_info.value(), context);

Node* create =

graph()->NewNode(javascript()->Create(), call.target(), new_target,

context, frame_state_inside, effect, control);

uncaught_subcalls.push_back(create); // Adds {IfSuccess} & {IfException}.

NodeProperties::ReplaceControlInput(node, create);

NodeProperties::ReplaceEffectInput(node, create);

// Placeholder to hold {node}'s value dependencies while {node} is

// replaced.

Node* dummy = graph()->NewNode(common()->Dead());

NodeProperties::ReplaceUses(node, dummy, node, node, node);

Node* result;

// Insert a check of the return value to determine whether the return

// value or the implicit receiver should be selected as a result of the

// call.

Node* check = graph()->NewNode(simplified()->ObjectIsReceiver(), node);

result =

graph()->NewNode(common()->Select(MachineRepresentation::kTagged),

check, node, create);

receiver = create; // The implicit receiver.

ReplaceWithValue(dummy, result);

} else if (IsDerivedConstructor(shared_info->kind())) {

Node* node_success =

NodeProperties::FindSuccessfulControlProjection(node);

Node* is_receiver =

graph()->NewNode(simplified()->ObjectIsReceiver(), node);

Node* branch_is_receiver =

graph()->NewNode(common()->Branch(), is_receiver, node_success);

Node* branch_is_receiver_true =

graph()->NewNode(common()->IfTrue(), branch_is_receiver);

Node* branch_is_receiver_false =

graph()->NewNode(common()->IfFalse(), branch_is_receiver);

branch_is_receiver_false =

graph()->NewNode(javascript()->CallRuntime(

Runtime::kThrowConstructorReturnedNonObject),

context, NodeProperties::GetFrameStateInput(node),

node, branch_is_receiver_false);

uncaught_subcalls.push_back(branch_is_receiver_false);

branch_is_receiver_false =

graph()->NewNode(common()->Throw(), branch_is_receiver_false,

branch_is_receiver_false);

NodeProperties::MergeControlToEnd(graph(), common(),

branch_is_receiver_false);

ReplaceWithValue(node_success, node_success, node_success,

branch_is_receiver_true);

// Fix input destroyed by the above {ReplaceWithValue} call.

NodeProperties::ReplaceControlInput(branch_is_receiver, node_success, 0);

}

node->ReplaceInput(1, receiver);

// Insert a construct stub frame into the chain of frame states. This will

// reconstruct the proper frame when deoptimizing within the constructor.

frame_state = CreateArtificialFrameState(

node, frame_state, call.formal_arguments(),

BailoutId::ConstructStubInvoke(), FrameStateType::kConstructStub,

shared_info.value(), context);

}

// Insert a JSConvertReceiver node for sloppy callees. Note that the context

// passed into this node has to be the callees context (loaded above).

if (node->opcode() == IrOpcode::kJSCall &&

is_sloppy(shared_info->language_mode()) && !shared_info->native()) {

Node* effect = NodeProperties::GetEffectInput(node);

if (NodeProperties::CanBePrimitive(broker(), call.receiver(), effect)) {

CallParameters const& p = CallParametersOf(node->op());

Node* global_proxy =

jsgraph()->Constant(broker()->native_context().global_proxy_object());

Node* receiver = effect =

graph()->NewNode(simplified()->ConvertReceiver(p.convert_mode()),

call.receiver(), global_proxy, effect, start);

NodeProperties::ReplaceValueInput(node, receiver, 1);

NodeProperties::ReplaceEffectInput(node, effect);

}

}

// Insert argument adaptor frame if required. The callees formal parameter

// count (i.e. value outputs of start node minus target, receiver, new target,

// arguments count and context) have to match the number of arguments passed

// to the call.

int parameter_count = shared_info->internal_formal_parameter_count();

DCHECK_EQ(parameter_count, start->op()->ValueOutputCount() - 5);

if (call.formal_arguments() != parameter_count) {

frame_state = CreateArtificialFrameState(

node, frame_state, call.formal_arguments(), BailoutId::None(),

FrameStateType::kArgumentsAdaptor, shared_info.value());

}

return InlineCall(node, new_target, context, frame_state, start, end,

exception_target, uncaught_subcalls);

}

Reference

【+】TurboFan Inlining Heuristics

TODO

- Builtin Inline 的源码分析